
Install Open Source VMware Tools on Red Hat Enterprise/CentOS/Scientific Linux 6

VMware now makes a repository available for us to install the VMware tools for a variety of Linux distributions including Red Hat, Scientific, CentOS, and Ubuntu. In this example I will install VMware tools on a Red Hat Enterprise/CentOS/Scientific Linux 6 guest running on a VMware ESXi 4.1 host.

First import the VMware repository GPG signing public keys:

# rpm --import http://packages.vmware.com/tools/keys/VMWARE-PACKAGING-GPG-DSA-KEY.pub

# rpm --import http://packages.vmware.com/tools/keys/VMWARE-PACKAGING-GPG-RSA-KEY.pub

Now add the VMware repository. You can either use the “echo” command below or create the file by hand with the contents listed after it. The repository also holds packages for other Linux distros, architectures, and ESX host versions. Again, I am using the Red Hat Enterprise 6/VMware ESXi 4.1 version.

# echo -e "[vmware-tools]\nname=VMware Tools\nbaseurl=http://packages.vmware.com/tools/esx/4.1latest/rhel6/\$basearch\nenabled=1\ngpgcheck=1" > /etc/yum.repos.d/vmware-tools.repo

Now we can list the contents of the new repo file:

[root@server1 ~]# cat /etc/yum.repos.d/vmware-tools.repo

Here is what the contents should look like:

[vmware-tools]

name=VMware Tools

baseurl=http://packages.vmware.com/tools/esx/4.1latest/rhel6/$basearch

enabled=1

gpgcheck=1
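As an alternative to the “echo” one-liner, the same file can be written with a here-document, which avoids the escaped newlines. This is only a sketch: the function name write_vmware_repo is my own, and the target path is passed in as an argument so it can be tried on a scratch file first.

```shell
# Write the repo definition to the path given as $1.
# The quoted 'EOF' delimiter stops the shell from expanding $basearch,
# so yum receives the variable literally and fills in the architecture itself.
write_vmware_repo() {
    cat > "$1" <<'EOF'
[vmware-tools]
name=VMware Tools
baseurl=http://packages.vmware.com/tools/esx/4.1latest/rhel6/$basearch
enabled=1
gpgcheck=1
EOF
}

# Example (as root): write_vmware_repo /etc/yum.repos.d/vmware-tools.repo
```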

It is now time to run the actual install of VMware tools. In my case I am installing on a server system without an X11 graphical interface, so this is the minimum install:

# yum -y install vmware-open-vm-tools-nox

If you are installing on a workstation or server with X11 installed and would like the VMware display adapter and mouse drivers loaded, use this command instead. The install will be a bit bigger:

# yum -y install vmware-open-vm-tools

You are now up and running with VMware tools!

Configure NFS Server v3 and v4 on Scientific Linux 6 and Red Hat Enterprise Linux (RHEL) 6

Recently the latest version of Scientific Linux 6 was released. Scientific Linux is a distribution which uses Red Hat Enterprise Linux as its upstream and aims to be compatible with binaries compiled for Red Hat Enterprise. I am really impressed with the quality of this distro and the timeliness with which updates and security fixes are distributed. Thanks to all the developers and testers on the Scientific Linux team! Now let’s move on to configuring an NFS server on RHEL/Scientific Linux.

In my environment I will be using VMware ESXi 4.1 and Ubuntu 10.10 as NFS clients. ESXi 4.1 supports a maximum of NFS v3 so that version will need to remain activated. Fortunately it appears as though out of the box the NFS server on RHEL/Scientific Linux has support for NFS v3 and v4. Ubuntu 10.10 will by default use the NFSv4 protocol.

First make a directory to serve as the NFS export and assign permissions. Open up write permissions on this directory only if you’d like anyone to be able to write to it. Be careful: this has security implications, because anyone who mounts the share will be able to write to it:

# mkdir /nfs

# chmod a+w /nfs

Now we need to install the NFS server packages. We will include the “rpcbind” package, which replaces the older “portmap” service. Note that “rpcbind” may not need to be running if you are going to use NFSv4 only, but it is a dependency of the “nfs-utils” package.

# yum -y install nfs-utils rpcbind

Verify that the required services are configured to start at boot. “rpcbind” and “nfslock” should be on by default anyhow:

# chkconfig nfs on

# chkconfig rpcbind on

# chkconfig nfslock on

Configure Iptables Firewall for NFS

Rather than disabling the firewall it is a good idea to configure NFS to work with iptables. For NFSv3 we need to lock several daemons related to rpcbind/portmap to statically assigned ports. We will then specify these ports to be made available in the INPUT chain for inbound traffic. Fortunately for NFSv4 this is greatly simplified and in a basic configuration TCP 2049 should be the only inbound port required.

First edit the “/etc/sysconfig/nfs” file and uncomment these directives. You can customize the ports if you wish but I will stick with the defaults:

# vi /etc/sysconfig/nfs

RQUOTAD_PORT=875

LOCKD_TCPPORT=32803

LOCKD_UDPPORT=32769

MOUNTD_PORT=892

STATD_PORT=662

STATD_OUTGOING_PORT=2020
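If you would rather not edit the file by hand, the uncommenting step could be scripted with sed. This is a minimal sketch, assuming the stock file ships each directive as a line starting with “#” followed by the key (for example “#MOUNTD_PORT=892”) and that GNU sed is available; the function name uncomment_nfs_ports is my own.

```shell
# Uncomment the six static-port directives in the file given as $1.
# Assumes each directive appears as "#KEY=value" (optionally "# KEY=value").
uncomment_nfs_ports() {
    conf_file="$1"
    for key in RQUOTAD_PORT LOCKD_TCPPORT LOCKD_UDPPORT \
               MOUNTD_PORT STATD_PORT STATD_OUTGOING_PORT; do
        # Strip the leading "#" only from the line that sets this key.
        sed -i "s/^#[[:space:]]*\(${key}=\)/\1/" "$conf_file"
    done
}

# Example (as root, after backing the file up):
#   cp -p /etc/sysconfig/nfs /etc/sysconfig/nfs.bak
#   uncomment_nfs_ports /etc/sysconfig/nfs
```

Other commented directives in the file are left untouched, so the change stays limited to the ports listed above.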

We now need to modify the iptables firewall configuration to allow access to the NFS ports. I will use the “iptables” command and insert the appropriate rules:

# iptables -I INPUT -p tcp -m multiport --dports 111,662,875,892,2049,32803 -j ACCEPT

# iptables -I INPUT -p udp -m multiport --dports 111,662,875,892,2049,32769 -j ACCEPT

Now save the iptables configuration to the config file so it will apply when the system is restarted:

# service iptables save
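If you customized any of the ports, the list passed to iptables has to change with them. As a sketch, the list could be built from the NFS sysconfig file instead of being typed by hand. The function name nfs_port_list is hypothetical; 111 (rpcbind) and 2049 (NFS) are the fixed ports already used in the rules above, and the rest are read from the directives uncommented earlier.

```shell
# Print the comma-separated port list for one protocol.
#   $1 = tcp or udp
#   $2 = absolute path to the sysconfig file (e.g. /etc/sysconfig/nfs)
nfs_port_list() {
    proto="$1"
    conf_file="$2"
    (
        # Source the file in a subshell so its variables do not leak out.
        . "$conf_file"
        if [ "$proto" = "tcp" ]; then
            lockd_port="$LOCKD_TCPPORT"
        else
            lockd_port="$LOCKD_UDPPORT"
        fi
        echo "111,${STATD_PORT},${RQUOTAD_PORT},${MOUNTD_PORT},2049,${lockd_port}"
    )
}

# Example (as root):
#   iptables -I INPUT -p tcp -m multiport \
#       --dports "$(nfs_port_list tcp /etc/sysconfig/nfs)" -j ACCEPT
```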

Now we need to edit “/etc/exports” and add the path to publish over NFS. In this example I will make the NFS export available to clients on the 192.168.10.0/24 subnet. I will also allow read/write access, specify synchronous writes, and allow root access. The “async” option would give higher performance, but the server then acknowledges writes before they reach disk, so a server crash can lose data. Root access (“no_root_squash”) is potentially a security risk, but as far as I know it is necessary with VMware ESXi.

# vi /etc/exports

/nfs 192.168.10.0/255.255.255.0(rw,sync,no_root_squash)

Configure SELinux for NFS Export

Rather than disable SELinux it is a good idea to configure it to allow remote clients to access files that are exported via NFS share. This is fairly simple and involves setting the SELinux boolean value using the “setsebool” utility. In this example we’ll use the “read/write” boolean but we can also use “nfs_export_all_ro” to allow NFS exports read-only and “use_nfs_home_dirs” to allow home directories to be exported.

# setsebool -P nfs_export_all_rw 1

Now we will start the NFS services:

# service rpcbind start

# service nfs start

# service nfslock start

If at any point you add or remove directory exports in the “/etc/exports” file, run “exportfs” to update the export table. The “-r” flag re-exports everything and drops entries that are no longer listed:

# exportfs -ra

Implement TCP Wrappers for Greater Security

TCP Wrappers give us finer control over which hosts may reach certain listening daemons on the NFS server than iptables alone. Keep in mind that TCP Wrappers parses “hosts.allow” first, then “hosts.deny”, and the first match determines access. If there is no match in either file, access is permitted.

Append a rule with a subnet or domain name appropriate for your environment to restrict allowable access. Domain names are implemented with a preceding period, such as “.mydomain.com” without the quotations. The subnet can also be specified like “192.168.10.” if desired instead of including the netmask.

# vi /etc/hosts.allow

mountd: 192.168.10.0/255.255.255.0

Append these directives to the “hosts.deny” file to deny access from all other domains or networks:

# vi /etc/hosts.deny

portmap:ALL

lockd:ALL

mountd:ALL

rquotad:ALL

statd:ALL

And that should just about do it. No restarts should be necessary to apply the TCP Wrappers configuration. I was able to connect with both my Ubuntu NFSv4 and VMware ESXi NFSv3 clients without issues. If you’d like to check activity and see the different NFS versions running simply type:

# nfsstat

Good luck with your new NFS server!

References:

http://www.cyberciti.biz/faq/centos-fedora-rhel-iptables-open-nfs-server-ports/

Cloned Red Hat/CentOS/Scientific Linux Virtual Machines and “Device eth0 does not seem to be present” Message

Recently I was preparing some new virtual machines in VMware running Scientific Linux 6. I encountered some difficulty with the virtual network interface after preparing clones of the machines. In particular I was unable to get the virtual NIC on the newly cloned machine to be recognized as a valid interface. Upon further investigation the NIC on the newly cloned machines was being registered as “eth1”. We can check the currently registered “eth” devices here:

[root@sl6 ~]# ls /sys/class/net

eth1 lo sit0

As it turns out, the Linux device manager “udev” remembers the settings from the NIC of the virtual machine before it was cloned. I had not run into this before because my previous Linux VM installs, which were mainly CentOS 5, did not exhibit it.

Since the hardware address of the network interface changes as part of the clone, the system sees the NIC after the clone as “new” and assigns it to eth1. The simple way to move the interface back to eth0 is to edit the strings beginning with “SUBSYSTEM” in udev’s network persistence file.

Start off by removing the first “SUBSYSTEM” entry that represents the “old” eth0 interface. Then edit the second “SUBSYSTEM” entry, changing the “NAME” parameter from “eth1” to “eth0”. Of course your config may vary from mine. Keep in mind that the “SUBSYSTEM” line may be wrapped in the text below.

Old file:

[root@sl6 ~]# cat /etc/udev/rules.d/70-persistent-net.rules

# This file was automatically generated by the /lib/udev/write_net_rules

# program, run by the persistent-net-generator.rules rules file.

#

# You can modify it, as long as you keep each rule on a single

# line, and change only the value of the NAME= key.

# PCI device 0x15ad:0x07b0 (vmxnet3) (custom name provided by external tool)

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:87:00:21", ATTR{type}=="1", KERNEL=="eth*", NAME="eth0"

# PCI device 0x15ad:0x07b0 (vmxnet3)

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:87:00:25", ATTR{type}=="1", KERNEL=="eth*", NAME="eth1"

New file:

[root@sl6 ~]# cat /etc/udev/rules.d/70-persistent-net.rules

# This file was automatically generated by the /lib/udev/write_net_rules

# program, run by the persistent-net-generator.rules rules file.

#

# You can modify it, as long as you keep each rule on a single

# line, and change only the value of the NAME= key.

# PCI device 0x15ad:0x07b0 (vmxnet3) (custom name provided by external tool)

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:87:00:25", ATTR{type}=="1", KERNEL=="eth*", NAME="eth0"
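The same edit can be scripted if you clone machines often. This is a rough sketch, assuming exactly the situation above (a stale “eth0” rule followed by a new “eth1” rule) and GNU sed; the function name fix_cloned_nic_rules is my own, and it keeps a “.bak” copy of the original file.

```shell
# Drop the stale eth0 rule and rename the eth1 rule to eth0.
#   $1 = path to the rules file
fix_cloned_nic_rules() {
    rules_file="$1"
    cp -p "$rules_file" "${rules_file}.bak"
    # First expression: delete the rule that still names the old NIC eth0.
    # Second expression: rename the remaining eth1 rule to eth0.
    # A renamed line is not deleted, because the delete test for that
    # line has already run before the substitution happens.
    sed -i -e '/^SUBSYSTEM==.*NAME="eth0"/d' \
           -e '/^SUBSYSTEM==/s/NAME="eth1"/NAME="eth0"/' "$rules_file"
}

# Example (as root):
#   fix_cloned_nic_rules /etc/udev/rules.d/70-persistent-net.rules
```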

Now verify that you have a properly configured network config file. Note that HWADDR must match the new MAC address from the udev rule. The example below is for Red Hat/CentOS/Scientific Linux:

[root@sl6 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

HWADDR="00:50:56:87:00:25"

IPV6INIT="no"

IPV6_AUTOCONF="no"

NM_CONTROLLED="no"

ONBOOT="yes"

IPADDR="192.168.10.125"

NETMASK="255.255.255.0"

NETWORK="192.168.10.0"

BROADCAST="192.168.10.255"
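If the config file was copied over from the original machine, its HWADDR line will still carry the old MAC address. A small sketch for updating it follows; the function name set_ifcfg_hwaddr is hypothetical, GNU sed is assumed, and the file is expected to already contain an HWADDR line.

```shell
# Replace the HWADDR line in an ifcfg file.
#   $1 = path to the ifcfg file
#   $2 = MAC address of the cloned NIC
set_ifcfg_hwaddr() {
    ifcfg_file="$1"
    mac="$2"
    sed -i "s/^HWADDR=.*/HWADDR=\"${mac}\"/" "$ifcfg_file"
}

# Example (as root), reading the MAC of the NIC currently seen as eth1:
#   set_ifcfg_hwaddr /etc/sysconfig/network-scripts/ifcfg-eth0 \
#       "$(cat /sys/class/net/eth1/address)"
```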

Reboot the system and the NIC should be registered as eth0!

Set Up Windows 2003 R2 NFS Server for VMware ESXi Backups

Generally my preference is to use Linux as an NFS server. On the internet you will see frequent references to the belief that NFS works better on Linux/UNIX. Recently I decided to set up NFS services on Windows to see how well it would perform. In this tutorial I will set up Services for UNIX 3.5 on a Windows 2003 R2 server and configure it with the User Name Mapping service so that a VMware ESXi host can use it as a datastore for VMs or backups via non-anonymous connections.

First off grab the Windows Services for UNIX (SFU) installation files here. Extract the files from the download file and run the setup.

Click Next.

Select Custom Installation and click Next.

Create VMware ESXi 4.1 USB Flash Boot Drive for White Boxes with Unsupported Network Cards

One of the convenient features of VMware ESXi 4.1 is the ability to use a USB flash drive as a boot device. While installing ESXi on supported server hardware is relatively easy, installing on unsupported hardware can require some maneuvering to get the installation to complete properly. In particular, the ESXi 4+ install needs to detect a supported network card to succeed. You can’t incorporate unsupported network drivers afterward, as was possible with ESXi 3/3.5.

To get around these issues I will take advantage of the capability to install VMware ESXi inside a virtual machine. In this tutorial I will install ESXi 4.1 to a USB drive attached to a virtual machine running inside VMware Workstation 7.1. After the installation has succeeded I will attach the new USB boot drive to a CentOS Linux virtual machine (this tutorial assumes that you have one available) and add the network card drivers needed by my ESXi white box.

First inside VMware Workstation configure a new virtual machine.

Choose typical and click Next.

In this case I will use the ESXi 4.1 ISO, which can be obtained for free from VMware, as the installation media. Select the second option and click Browse.

Browse and select the ESXi ISO and click Open.

Click Next.

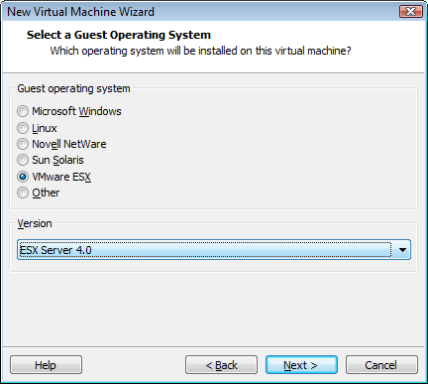

For the guest OS, select VMware ESX and choose the ESX Server 4.0 version.
